In this article, we are going to learn how to configure Azure Blob storage. Specifically, we will learn how to:

- Identify features and use cases for Azure Blob storage.

- Configure Blob storage and Blob access tiers.

- Configure Blob lifecycle management rules.

- Configure Blob object replication.

- Upload blobs and understand Blob storage pricing.

What is blob storage?

Azure Blob storage is Microsoft’s cloud object storage service. Blob storage is designed to store large amounts of unstructured data. Unstructured data, such as text or binary data, doesn’t adhere to a particular data model or definition.

How to implement blob storage

Blob storage can hold any type of text or binary data, such as a document, a media file, or an application installer. Blob storage is also referred to as object storage.

Blob storage is commonly used for the following purposes:

- Serving images or documents directly to a browser.

- Storing files for distributed access, such as installation files.

- Streaming video and audio.

- Backing up and restoring data, disaster recovery, and archiving.

- Storing data for analysis by an on-premises or Azure-hosted service.

Blob service resources

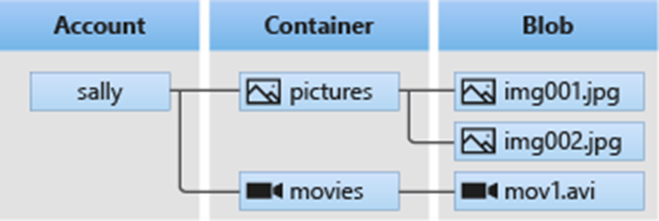

Blob storage offers three types of resources:

- The storage account.

- Containers in the storage account.

- Blobs in a container.

The relationship between these resources is shown in the following diagram.

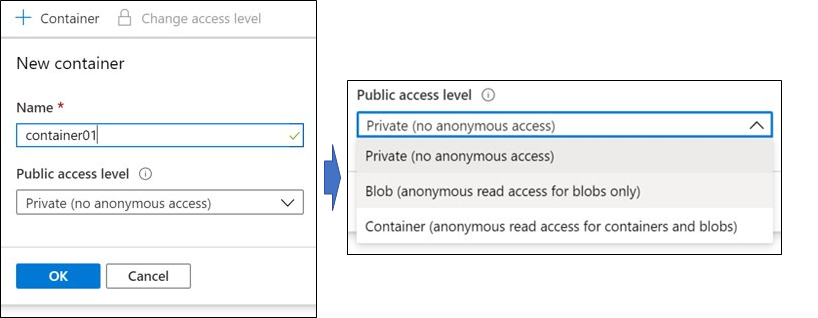
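The account, container, and blob hierarchy also shows up in every blob's URL. The sketch below builds that URL, assuming the default public Azure cloud endpoint (`blob.core.windows.net`); the helper name `blob_url` and the account, container, and blob names are hypothetical.

```python
from urllib.parse import quote


def blob_url(account: str, container: str, blob: str) -> str:
    """Build the URL of a blob in the default public Azure cloud (assumed endpoint).

    The path mirrors the resource hierarchy:
    https://<account>.blob.core.windows.net/<container>/<blob>
    """
    # "/" is kept as-is because blob names may use it to mimic folders;
    # other reserved characters (such as spaces) are percent-encoded.
    return f"https://{account}.blob.core.windows.net/{container}/{quote(blob, safe='/')}"


print(blob_url("mystorageaccount", "images", "2024/photo 1.png"))
# https://mystorageaccount.blob.core.windows.net/images/2024/photo%201.png
```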

How to create blob containers

A container organizes a collection of blobs, and every blob must be stored in a container. A storage account can include an unlimited number of containers, and a container can store an unlimited number of blobs. You can create a container in the Azure portal.

When you name a container, make sure the name contains only lowercase letters, numbers, and hyphens, and that it begins with a letter or a number. The name must also be between 3 and 63 characters long.
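These naming rules are easy to check before calling Azure. The sketch below encodes them, plus Azure's documented rule that every hyphen must be immediately preceded and followed by a letter or number (so no leading, trailing, or consecutive hyphens). The function name `is_valid_container_name` is hypothetical and is not part of any Azure SDK.

```python
import re

# One letter/digit, then any mix of letters/digits and hyphens where each hyphen
# must be followed by a letter/digit. This rules out leading, trailing, and
# consecutive hyphens as well as uppercase letters.
_CONTAINER_NAME = re.compile(r"[a-z0-9](?:[a-z0-9]|-(?=[a-z0-9]))*")


def is_valid_container_name(name: str) -> bool:
    """Return True if `name` satisfies the container naming rules described above."""
    # fullmatch (not match + "$") so a trailing newline is not silently accepted.
    return 3 <= len(name) <= 63 and _CONTAINER_NAME.fullmatch(name) is not None


for candidate in ["my-container-01", "My-Container", "logs-", "a--b", "ab"]:
    print(candidate, is_valid_container_name(candidate))
```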

The public access level specifies whether data in the container can be accessed anonymously by the public. By default, container data is private to the account owner.

You can choose one of three access levels:

- Private: prevents anonymous access to the container and its blobs.

- Blob: grants anonymous public read access to blobs only.

- Container: grants anonymous public read and list access to the entire container, including its blobs.
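To make the difference between the three levels concrete, here is a small model of what an anonymous (unauthenticated) caller may do at each level. It is a hypothetical illustration only: `PublicAccessLevel`, `anonymous_request_allowed`, and the operation names `"read_blob"` and `"list_blobs"` are invented for this sketch, and authorized requests such as the account owner's are not modeled.

```python
from enum import Enum


class PublicAccessLevel(Enum):
    PRIVATE = "private"      # no anonymous access at all
    BLOB = "blob"            # anonymous read of individual blobs only
    CONTAINER = "container"  # anonymous read of blobs plus listing the container


def anonymous_request_allowed(level: PublicAccessLevel, operation: str) -> bool:
    """Return True if an anonymous caller may perform `operation` at `level`.

    Supported operations in this toy model: "read_blob" and "list_blobs".
    """
    if operation == "read_blob":
        return level in (PublicAccessLevel.BLOB, PublicAccessLevel.CONTAINER)
    if operation == "list_blobs":
        return level is PublicAccessLevel.CONTAINER
    raise ValueError(f"unknown operation: {operation!r}")


for level in PublicAccessLevel:
    print(level.value,
          anonymous_request_allowed(level, "read_blob"),
          anonymous_request_allowed(level, "list_blobs"))
```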

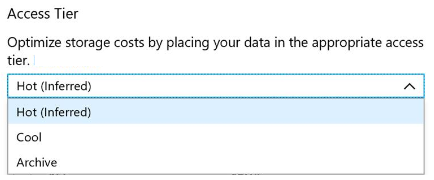

How to configure blob access tiers

Azure Storage offers several access tiers for block blob data, and each tier is designed for a specific data usage pattern. By selecting the appropriate access tier for your needs, you can store your block blob data in the most cost-effective way.

Hot: The Hot tier is optimized for frequent access to objects in the storage account. New storage accounts use the Hot tier by default.

Cool: The Cool tier is best for storing large amounts of data that is accessed infrequently and kept for at least 30 days. Storing data in the Cool tier is less expensive, but accessing it can cost more than accessing data in the Hot tier.

Archive: The Archive tier is designed for data that can tolerate several hours of retrieval latency and will be kept for at least 180 days. Keeping data in the Archive tier is the most cost-effective option, but accessing that data is more expensive than accessing it in the Hot or Cool tiers.
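The tier descriptions above can be read as a simple decision rule. The sketch below is a hypothetical helper, not an Azure API: it uses the 30-day and 180-day retention guidance from the text, and the threshold for "infrequent" access (fewer than one access per month) is an illustrative assumption rather than an Azure rule.

```python
def choose_access_tier(accesses_per_month: float, retention_days: int,
                       tolerates_hours_of_latency: bool) -> str:
    """Suggest Hot, Cool, or Archive from a data set's usage pattern.

    Assumption: "infrequent" means fewer than one access per month.
    """
    infrequent = accesses_per_month < 1
    # Archive: rarely read, kept at least 180 days, and hours of latency are acceptable.
    if infrequent and tolerates_hours_of_latency and retention_days >= 180:
        return "Archive"
    # Cool: rarely read and kept at least 30 days.
    if infrequent and retention_days >= 30:
        return "Cool"
    # Everything else, including frequently accessed data, stays Hot.
    return "Hot"


print(choose_access_tier(accesses_per_month=200, retention_days=365, tolerates_hours_of_latency=False))
print(choose_access_tier(accesses_per_month=0.1, retention_days=60, tolerates_hours_of_latency=False))
print(choose_access_tier(accesses_per_month=0.1, retention_days=730, tolerates_hours_of_latency=True))
```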

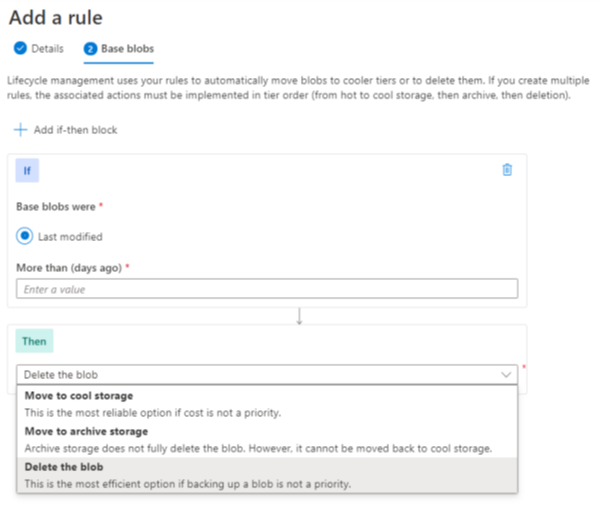

How to add blob lifecycle management rules

Data sets have different lifecycles. Some data sits idle in the cloud and is rarely accessed once it is stored. Some data sets are read and modified frequently for a few days or months after they are created, while others are actively read and modified throughout their lifetimes. To manage these patterns, Azure Blob storage lifecycle management offers a rule-based policy for general-purpose v2 (GPv2) and Blob storage accounts.

You can use a lifecycle management policy to:

- Move blobs to a cooler storage tier (hot to cool, hot to archive, or cool to archive) to optimize for performance and cost.

- Delete blobs at the end of their lifecycles.

- Define rules at the storage account level that run once per day.

- Apply rules to containers or to a subset of blobs.
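Lifecycle rules are written as a JSON policy. The sketch below builds one rule in the JSON shape Azure documents for storage account management policies (`rules`, `definition`, `filters`, `actions.baseBlob`, `daysAfterModificationGreaterThan`); the rule name `age-out-logs` and the `mycontainer/logs/` prefix are hypothetical, and `prefixMatch` entries begin with the container name. The `due_action` function is a toy simulation for reasoning about a rule, not Azure's policy engine. It follows the documented behavior that when several actions are due on the same blob, the least expensive one (delete, then tierToArchive, then tierToCool) is applied.

```python
import json

# One lifecycle rule: cool after 30 days, archive after 180, delete after 365.
policy = {
    "rules": [
        {
            "enabled": True,
            "name": "age-out-logs",
            "type": "Lifecycle",
            "definition": {
                "filters": {
                    "blobTypes": ["blockBlob"],
                    "prefixMatch": ["mycontainer/logs/"],
                },
                "actions": {
                    "baseBlob": {
                        "tierToCool": {"daysAfterModificationGreaterThan": 30},
                        "tierToArchive": {"daysAfterModificationGreaterThan": 180},
                        "delete": {"daysAfterModificationGreaterThan": 365},
                    }
                },
            },
        }
    ]
}

policy_json = json.dumps(policy, indent=2)  # e.g. save this as policy.json

# Cheapest action first, so the first one that is due wins.
ACTIONS_CHEAPEST_FIRST = ["delete", "tierToArchive", "tierToCool"]


def due_action(rule, blob_path, days_since_modified):
    """Toy simulation: which base-blob action would this rule take today?

    blob_path starts with the container name, as prefixMatch entries do.
    """
    if not rule["enabled"]:
        return None
    definition = rule["definition"]
    prefixes = definition["filters"].get("prefixMatch", [])
    if prefixes and not any(blob_path.startswith(p) for p in prefixes):
        return None
    base_blob_actions = definition["actions"]["baseBlob"]
    for action in ACTIONS_CHEAPEST_FIRST:
        condition = base_blob_actions.get(action)
        if condition and days_since_modified > condition["daysAfterModificationGreaterThan"]:
            return action
    return None


print(policy_json)
```

A saved policy file can then be applied with the Azure CLI, for example `az storage account management-policy create --account-name <account> --resource-group <group> --policy @policy.json`.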

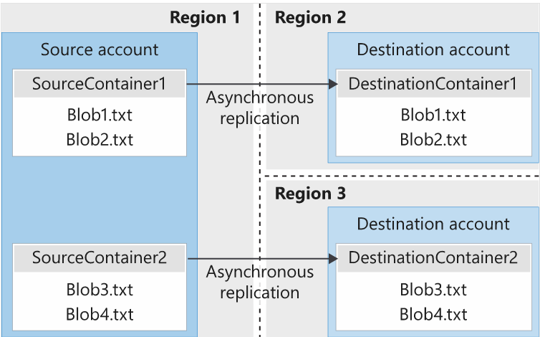

How to configure blob object replication

Object replication asynchronously copies block blobs from a source container to a destination container according to rules that you define. The blob’s contents, any versions associated with the blob, and the blob’s metadata and properties are all copied from the source container to the destination container.

Object replication is useful in the following scenarios:

Minimizing latency: Object replication can reduce read latency by letting clients read data from a region that is physically closer to them.

Increasing efficiency for compute workloads: With object replication, compute workloads in different regions can process the same sets of block blobs.

Optimizing data distribution: You can process or analyze data in one location and then replicate only the results to other regions.

Optimizing costs: After your data has been replicated, you can use lifecycle management policies to move it to the Archive tier and reduce costs.
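The "replicate only the results" scenario depends on rule filters. The sketch below models a policy in the JSON shape shown in Azure's object replication documentation (`sourceAccount`, `destinationAccount`, `rules`, `sourceContainer`, `destinationContainer`, `filters.prefixMatch`); treat that shape as something to verify against the current docs. The account IDs are placeholders, the container names and `results/` prefix are hypothetical, and `replication_targets` only shows which rule matches a new blob, not the asynchronous copy itself.

```python
# Object replication policy: copy only blobs under "results/" to a replica container.
replication_policy = {
    "properties": {
        "policyId": "default",
        "sourceAccount": "<source-account-resource-id>",
        "destinationAccount": "<destination-account-resource-id>",
        "rules": [
            {
                "ruleId": "",
                "sourceContainer": "analytics",
                "destinationContainer": "analytics-replica",
                "filters": {"prefixMatch": ["results/"]},
            }
        ],
    }
}


def replication_targets(policy, source_container, blob_name):
    """Return the destination containers a new block blob would be copied to."""
    targets = []
    for rule in policy["properties"]["rules"]:
        if rule["sourceContainer"] != source_container:
            continue
        # Prefixes here are relative to the blob name inside the source container.
        prefixes = rule.get("filters", {}).get("prefixMatch", [])
        if not prefixes or any(blob_name.startswith(p) for p in prefixes):
            targets.append(rule["destinationContainer"])
    return targets


print(replication_targets(replication_policy, "analytics", "results/summary.csv"))
print(replication_targets(replication_policy, "analytics", "input/raw.csv"))
```

Note that object replication also requires blob versioning to be enabled on both accounts and the change feed to be enabled on the source account.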

How to upload blobs

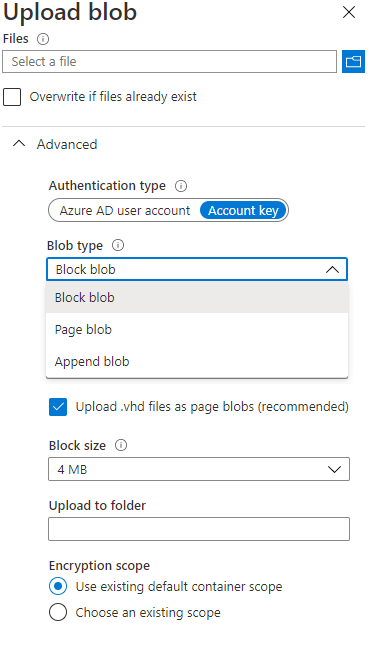

A blob can store any type of file, up to the maximum size supported by its blob type. Azure Storage offers three types of blobs: block blobs, page blobs, and append blobs. You specify the blob type, and optionally the access tier, when you create a blob.

- Block blobs (default): consist of blocks of data assembled to form a blob. Most Blob storage scenarios use block blobs, which are ideal for storing text and binary data in the cloud, such as files, images, and videos.

- Append blobs: like block blobs, they are made up of blocks, but they are optimized for append operations, which makes them well suited to logging scenarios.

- Page blobs: can be up to 8 TB in size and are more efficient for frequent read/write operations. Azure virtual machines use page blobs as operating system and data disks.
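The idea that a block blob "consists of blocks assembled to form a blob" can be sketched with a toy in-memory model: blocks are staged first, then a block list is committed, and the committed list defines the blob's content. `InMemoryBlockBlob` and `upload_in_blocks` are hypothetical; the method names only mirror `stage_block` and `commit_block_list` from the Azure Storage Python SDK, and nothing here talks to Azure. Block IDs are Base64-encoded and kept the same length because Azure requires that for all blocks in a blob.

```python
import base64


class InMemoryBlockBlob:
    """Toy block blob: stage blocks, then commit an ordered block list."""

    def __init__(self):
        self._staged = {}      # block ID -> bytes, not yet part of the blob
        self._committed = []   # ordered block IDs that make up the blob
        self._blocks = {}      # committed block ID -> bytes

    def stage_block(self, block_id: str, data: bytes) -> None:
        self._staged[block_id] = data

    def commit_block_list(self, block_ids) -> None:
        # A committed list may reference newly staged or previously committed blocks.
        blocks = {}
        for bid in block_ids:
            if bid in self._staged:
                blocks[bid] = self._staged[bid]
            elif bid in self._blocks:
                blocks[bid] = self._blocks[bid]
            else:
                raise KeyError(f"unknown block id {bid}")
        self._committed = list(block_ids)
        self._blocks = blocks
        self._staged.clear()  # uncommitted blocks are discarded after a commit

    def read(self) -> bytes:
        return b"".join(self._blocks[bid] for bid in self._committed)


def block_id(index: int) -> str:
    # Fixed-width counter, Base64-encoded, so every ID has the same length.
    return base64.b64encode(f"{index:06d}".encode()).decode()


def upload_in_blocks(blob: InMemoryBlockBlob, data: bytes, block_size: int) -> None:
    ids = []
    for i, start in enumerate(range(0, len(data), block_size)):
        bid = block_id(i)
        blob.stage_block(bid, data[start:start + block_size])
        ids.append(bid)
    blob.commit_block_list(ids)


blob = InMemoryBlockBlob()
upload_in_blocks(blob, b"hello block blob", block_size=4)
print(blob.read())
```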

Blob upload tools

There are several ways to upload data to blob storage, including the ones listed below:

AzCopy: a simple command-line utility for Windows, Linux, and macOS that copies data to and from Blob storage, across containers, and between storage accounts.
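A typical AzCopy upload uses `azcopy copy` with a local source, a container URL that carries a shared access signature (SAS) token, and `--recursive` for folders. The sketch below only builds that command; the helper name, account, container, folder, and `<sas-token>` are hypothetical placeholders, and the optional `--block-blob-tier` flag (for uploading directly into a tier such as Cool) should be checked against your AzCopy version.

```python
import shlex
from typing import List, Optional


def azcopy_upload_command(local_dir: str, account: str, container: str,
                          sas_token: str, tier: Optional[str] = None) -> List[str]:
    """Build (but do not run) an `azcopy copy` command that uploads a local folder."""
    # Destination is the container URL with the SAS token as its query string.
    destination = f"https://{account}.blob.core.windows.net/{container}?{sas_token.lstrip('?')}"
    command = ["azcopy", "copy", local_dir, destination, "--recursive"]
    if tier is not None:
        # Optional: upload block blobs directly into the given access tier.
        command.append(f"--block-blob-tier={tier}")
    return command


cmd = azcopy_upload_command("./reports", "mystorageaccount", "backups", "<sas-token>", tier="Cool")
print(shlex.join(cmd))
```

With AzCopy installed, the list could be passed to `subprocess.run(cmd, check=True)`; building the argument list separately keeps the SAS token out of shell string interpolation.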

Azure Storage Data Movement library: a .NET library for moving data between Azure Storage services. AzCopy is built with the Data Movement library.

Azure Data Factory: copies data to and from Blob storage, authenticating with an account key, a shared access signature, a service principal, or managed identities for Azure resources.

Blobfuse: a virtual file system driver for Azure Blob storage. You can use blobfuse to access your existing block blob data in your storage account through the Linux file system.

Azure Data Box Disk: a solution for transferring on-premises data to Blob storage when large datasets or network constraints make uploading data over the wire impractical. You can request solid-state disks (SSDs) from Microsoft, copy your data to those disks, and ship them back to Microsoft to be uploaded into Blob storage.

Azure Import/Export service: lets you import large amounts of data into, or export it from, your storage account by using hard drives that you supply. For an export, Microsoft copies the data from your storage account to your drives and ships them back to you.

Storage pricing

All storage accounts use a pricing model for Blob storage that is based on the tier of each blob. The following billing considerations apply when you use a storage account:

Performance tiers: The cost of storing data depends on both the amount of data stored and the storage tier. The per-gigabyte storage cost decreases as the tier gets cooler.

Data access costs: Data access charges increase as the tier gets cooler. For data in the Cool and Archive tiers, you are charged a per-gigabyte data access fee for reads.

Transaction costs: A per-transaction charge applies to all tiers and increases as the tier gets cooler.

Geo-replication data transfer costs: This charge applies only to accounts with geo-replication configured, such as geo-redundant storage (GRS) and read-access geo-redundant storage (RA-GRS). Geo-replication data transfer is billed per gigabyte.

Outbound data transfer costs: Outbound data transfers (data that is transferred out of an Azure region) are billed for bandwidth usage per gigabyte. This billing is consistent with general-purpose storage accounts.

Changing the storage tier: Changing the account storage tier from cool to hot incurs a charge equal to reading all the data in the storage account. Changing the account storage tier from hot to cool incurs a charge equal to writing all the data into the cool tier (GPv2 accounts only).
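The storage, data access, and transaction factors pull in opposite directions, so the cheapest tier depends on how much you read. The sketch below is a simple monthly cost model; `TierRates` and `monthly_cost` are hypothetical, geo-replication and outbound transfer charges are left out, and the rates are made-up placeholders chosen only to follow the trends described above. They are not real Azure prices, so use the Azure pricing page for actual numbers.

```python
from dataclasses import dataclass


@dataclass
class TierRates:
    """Per-tier prices. The values used below are PLACEHOLDERS, not real Azure prices."""
    storage_per_gb: float   # monthly capacity charge
    access_per_gb: float    # data access (retrieval) charge on reads
    per_10k_reads: float    # read transaction charge
    per_10k_writes: float   # write transaction charge


def monthly_cost(rates: TierRates, stored_gb: float, read_gb: float,
                 read_ops: int, write_ops: int) -> float:
    """Estimate one month's cost from storage, data access, and transactions."""
    return (stored_gb * rates.storage_per_gb
            + read_gb * rates.access_per_gb
            + read_ops / 10_000 * rates.per_10k_reads
            + write_ops / 10_000 * rates.per_10k_writes)


# Made-up rates: Cool stores data more cheaply but charges more to access it.
HOT = TierRates(storage_per_gb=0.02, access_per_gb=0.0, per_10k_reads=0.004, per_10k_writes=0.05)
COOL = TierRates(storage_per_gb=0.01, access_per_gb=0.01, per_10k_reads=0.01, per_10k_writes=0.10)

for read_gb in (50, 2000):
    hot = monthly_cost(HOT, 1000, read_gb, 100_000, 10_000)
    cool = monthly_cost(COOL, 1000, read_gb, 100_000, 10_000)
    print(f"read {read_gb} GB: Hot {hot:.2f}, Cool {cool:.2f}")
```

With these placeholder rates and 1,000 GB stored, Cool is cheaper when 50 GB is read per month (10.70 versus 20.09) but more expensive when 2,000 GB is read (30.20 versus 20.09), which is the trade-off the pricing factors describe.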

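In this article, we learned what Azure Blob storage is, why it is well suited to storing massive amounts of unstructured data, and how to configure Blob storage, including access tiers, lifecycle management, object replication, and uploads, along with how Blob storage is priced.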